I worked at Sparkart during college building high-traffic LAMP (Linux, Apache, MySQL, PHP) websites for major record labels and artists including Linkin Park, Korn, Static-X, Rob Zombie and Josh Groban. My two roommates and I built the first version of the LP Underground official fan club website for Linkin Park.

By my final year at the University of Washington I was taking a full course load of Computer Science and Engineering classes and working nearly full time for Sparkart. I was having a blast and learning so much!

Database Driven Websites

Sparkart mainly built “database driven websites” for clients such as popular bands. The websites consisted of a MySQL database and PHP web servers.

MySQL Databases

The MySQL databases typically had things like:

- News

- Events

- Tour Dates

- Album Info

- Users

- Admins

- Permissions

PHP Front Ends

The front ends were web servers running PHP code that queried the MySQL database and rendered the content as web pages.

Some of the things I did included:

- Designing MySQL Schemas

- Writing the PHP code to retrieve data from MySQL and display it

- Taking a Photoshop design from our designers and turning it into pixel perfect HTML and CSS
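As a rough illustration of the "query the database, render the page" pattern (not Sparkart's actual code), here is a minimal sketch in Python, with the standard-library sqlite3 module standing in for PHP and MySQL. The `news` table, its columns, the sample row and the `render_news` helper are all hypothetical.

```python
import html
import sqlite3


def create_schema(conn):
    # Hypothetical "news" table; a real schema would have more columns.
    conn.execute(
        """
        CREATE TABLE news (
            id INTEGER PRIMARY KEY,
            title TEXT NOT NULL,
            body TEXT NOT NULL,
            posted_at TEXT NOT NULL
        )
        """
    )


def render_news(conn, limit=10):
    # Fetch the newest items and render them as an HTML list.
    rows = conn.execute(
        "SELECT title, body FROM news ORDER BY posted_at DESC LIMIT ?",
        (limit,),
    ).fetchall()
    items = [
        # Escape database content before placing it in the page.
        f"<li><h2>{html.escape(title)}</h2><p>{html.escape(body)}</p></li>"
        for title, body in rows
    ]
    return "<ul>" + "".join(items) + "</ul>"


conn = sqlite3.connect(":memory:")
create_schema(conn)
conn.execute(
    "INSERT INTO news (title, body, posted_at) VALUES (?, ?, ?)",
    ("Tour announced", "Dates & venues soon", "2000-01-01"),
)
page = render_news(conn)
```

The query uses a bound parameter and the output is HTML-escaped, which are the two habits that matter most in this kind of page regardless of language.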

Content Management Systems

A key feature of most of the websites we built was a backend content management system that our clients could use to manage the content on their websites. It was available via my.sparkart.com and allowed the band manager (or whoever) to edit things like:

- News

- Events

- Tour Dates

Other Services

vBulletin

Most of the websites included a message board powered by vBulletin. I spent a lot of time maintaining those message boards including:

- Upgrading vBulletin any time there was a new release

- Updating styling every time the main website changed

- Tracking down and tuning slow MySQL queries

The message boards for sites like Linkin Park were very active! We would have to periodically go through and archive old posts to bring performance back under control.

Internet Relay Chat

A few of the band websites had a chat feature where you could talk with other fans. The backend for this was an IRC daemon and the front-end was a Java Applet that ran embedded on the websites.

Webmail

We hosted email for a bunch of our clients, with a web front-end provided via TWIG.

Domain Name System

We hosted our own Domain Name System (DNS) servers. Sparkart was using BIND when I started, which I found unnecessarily complicated. The configuration was complex and updating records required multiple steps (did you remember to increment the serial?!). It was not very pleasant to work with.

At some point I took over managing DNS and switched us over to djbdns’s tinydns which was much simpler and easier to work with. We could simply rsync records across our two DNS servers without any of that BIND Zone Transfer AXFR nonsense.
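To give a feel for why tinydns felt simpler, here is a small sketch that generates lines in the tinydns-data text format, where each record is a single line whose first character selects the record type (`+` for an A record, `C` for a CNAME). The host names, addresses and helper functions below are hypothetical, and this is only an illustration, not the tooling we actually used.

```python
def a_line(fqdn, ip, ttl=3600):
    # '+fqdn:ip:ttl' declares an A record in tinydns-data format.
    return f"+{fqdn}:{ip}:{ttl}"


def cname_line(fqdn, target, ttl=3600):
    # 'Cfqdn:target:ttl' declares a CNAME record.
    return f"C{fqdn}:{target}:{ttl}"


def render_data(records):
    # records: iterable of (kind, name, value) tuples.
    lines = []
    for kind, name, value in records:
        if kind == "A":
            lines.append(a_line(name, value))
        elif kind == "CNAME":
            lines.append(cname_line(name, value))
        else:
            raise ValueError(f"unsupported record kind: {kind}")
    return "\n".join(lines) + "\n"


data_text = render_data(
    [
        ("A", "www.example.com", "192.0.2.10"),
        ("CNAME", "forum.example.com", "www.example.com"),
    ]
)
```

A file like this is compiled with `tinydns-data` into a single `data.cdb` file, which is what makes copying records between servers with rsync practical.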

I also set up djbdns’s dnscache as our local DNS resolver and cache, which was also easy to configure.

Wearing Multiple Hats

I love wearing multiple hats! I’ve never just been a Software Engineer.

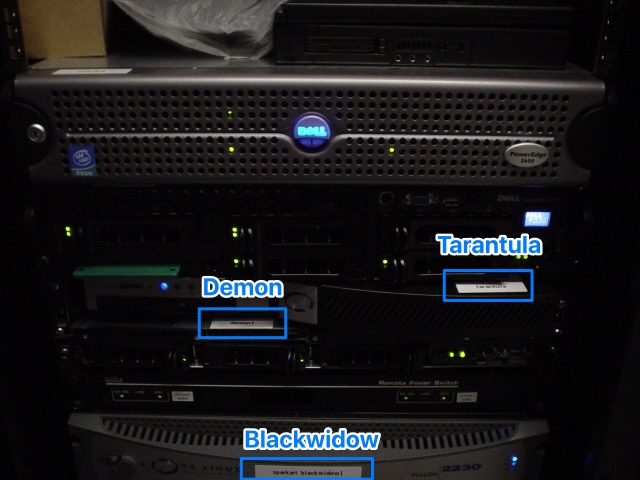

I started off doing PHP development and eventually ended up also maintaining the dozen or so Linux and FreeBSD servers, load balancers and routers that were co-located in an Equinix Data Center in San Jose.

Here are some of the hats I was wearing at Sparkart along with what those hats involved:

- Software Engineer

  - Designing MySQL schemas

  - Building PHP Websites

  - Converting Photoshop mock-ups into pixel perfect HTML & CSS

- System Administrator

  - Maintaining Linux & FreeBSD servers

  - Setting up new servers

  - Troubleshooting problems on servers

  - Maintaining DNS, vBulletin, etc.

- Database Administrator

  - Managing database servers and configuration

  - Tuning MySQL queries

- Network Administrator

  - Managing Switches, Routers and Load Balancers

Linux and FreeBSD Servers

Sparkart had a mix of Linux and FreeBSD Servers when I started working for them. Over time we retired the FreeBSD servers in favor of Linux.

Routers and Load Balancers

That time I locked everybody out of the Sparkart network. Oops!

I had a ton of fun playing around with and tweaking our configuration on the Sparkart routers and load balancers. Of course, making changes was always risky because you had the ability to disrupt production traffic and a wrong change on the router could lock you out of the network.

So, of course, that happened at least once. Fortunately it usually only took a reboot of the router to restore the previous working configuration. That required calling up the data center and requesting “remote hands” service where somebody would go over to your rack and do whatever you told them to do with the equipment. Sometimes that meant having them hook up a monitor and keyboard to a non-responsive server and then trying to guide them through typing in commands. In the simpler case it just required them to physically reboot a server or piece of networking gear if we couldn’t do that remotely ourselves.

Performance Improvements

I’ve always been obsessed with performance.

I made a number of performance improvements during my time at Sparkart. We had quite a bit of traffic across all of the websites we ran so performance improvements were very noticeable either in terms of a drop in load across the servers or faster page load times.

Alternative PHP Cache (APC)

One of the largest performance improvements was installing and using the Alternative PHP Cache (APC). APC used shared memory to cache the PHP byte code to avoid re-parsing a PHP file on every request. This alone provided a huge performance boost for our websites and all of our vBulletin instances.

APC also let you use the shared memory cache to store and retrieve your own keys. So I was able to also use it to cache things like MySQL query results which provided additional performance improvements.
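The query-result caching followed what is usually called the cache-aside pattern: look in the cache first, and only run the query on a miss. APC exposed its user cache through `apc_fetch` and `apc_store`; the sketch below mimics that idea in Python with an in-process dictionary. The `UserCache` class, the `cached_query` helper, the key name and the 60 second TTL are all hypothetical.

```python
import time


class UserCache:
    """In-process stand-in for a shared-memory key/value cache with TTLs."""

    def __init__(self, clock=time.monotonic):
        self._store = {}
        self._clock = clock

    def fetch(self, key):
        # Returns (hit, value); expired entries count as misses.
        entry = self._store.get(key)
        if entry is None:
            return False, None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._store[key]
            return False, None
        return True, value

    def store(self, key, value, ttl):
        self._store[key] = (value, self._clock() + ttl)


def cached_query(cache, key, run_query, ttl=60):
    # Cache-aside: try the cache, fall back to the database, then populate.
    hit, value = cache.fetch(key)
    if hit:
        return value
    value = run_query()
    cache.store(key, value, ttl)
    return value


calls = []


def latest_news():
    # Stand-in for an expensive MySQL query; records each invocation.
    calls.append(1)
    return ["Tour announced"]


cache = UserCache()
first = cached_query(cache, "news:latest", latest_news)
second = cached_query(cache, "news:latest", latest_news)
```

The second call is served from the cache, so the "query" only runs once. The trade-off is staleness: content edits do not show up until the TTL expires unless the key is explicitly replaced or deleted.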

Why Shared Memory?

Back in the days of Apache httpd 1.3 and mod_php, requests would be handled by a pool of httpd processes that had been forked from the main parent process. If the separate processes needed to share a cache then shared memory was one way to handle that.
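The prefork model can be sketched in a few lines on a Unix system. In the hypothetical example below, a parent process creates an anonymous shared mapping, forks a few workers, and each worker writes into its own slot; the parent then reads every slot back. This only illustrates memory shared across forked processes and is not how APC itself is implemented.

```python
import mmap
import os
import struct

SLOT = 8  # bytes per worker slot (one signed 64-bit integer)


def run_workers(n):
    # On Unix, mmap.mmap(-1, size) creates an anonymous mapping that is
    # shared (MAP_SHARED) by default, so it stays shared across fork().
    buf = mmap.mmap(-1, SLOT * n)
    pids = []
    for i in range(n):
        pid = os.fork()
        if pid == 0:
            # Child: write into its own slot, then exit without cleanup.
            try:
                struct.pack_into("q", buf, SLOT * i, i * i)
            finally:
                os._exit(0)
        pids.append(pid)
    for pid in pids:
        os.waitpid(pid, 0)
    # Parent: values written by the children are visible here.
    results = [struct.unpack_from("q", buf, SLOT * i)[0] for i in range(n)]
    buf.close()
    return results


results = run_workers(3)
```

Each worker gets its own slot here to avoid needing a lock. A real shared cache has many processes reading and writing the same keys, so it also needs locking around updates.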

MySQL Query Tuning

I spent a lot of time using MySQL’s EXPLAIN to figure out how SQL queries were being executed and using that to tweak the queries and/or add indexes to speed them up.
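The workflow looked roughly like the sketch below: ask the database how it plans to run a query, add an index, and ask again. To keep the example self-contained it uses SQLite's `EXPLAIN QUERY PLAN` from Python rather than MySQL's `EXPLAIN`, whose output format is different. The `posts` table and the `idx_posts_thread` index are hypothetical.

```python
import sqlite3


def query_plan(conn, sql, params=()):
    # The last column of each EXPLAIN QUERY PLAN row is a readable description.
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[-1] for row in rows)


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE posts (id INTEGER PRIMARY KEY, thread_id INTEGER, body TEXT)"
)

sql = "SELECT body FROM posts WHERE thread_id = ?"

# Without an index the database has to scan the whole table.
plan_before = query_plan(conn, sql, (42,))

# With an index on the filtered column it can search instead of scan.
conn.execute("CREATE INDEX idx_posts_thread ON posts (thread_id)")
plan_after = query_plan(conn, sql, (42,))
```

Printing `plan_before` and `plan_after` shows the plan change from a full table scan to a search using the new index, which is the same kind of before/after comparison I was making with MySQL.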

C Module for PHP

I wrote a C module for PHP that included all of the initialization code that we ran on each page. This included things like determining what site was currently being accessed, loading configuration files, setting up environment variables, etc. The PHP version took somewhere between 50 and 100 ms and the C version was closer to 5-10 ms, providing a nice performance improvement for just about every page load.

The C module also had the advantage of allowing me to define a “superglobal” variable, $SPARKART, which contained all of the site configuration for the current request. It was a little shorter than needing to go through $GLOBALS and wasn’t something you could do in pure PHP:

$SPARKART['site'] vs $GLOBALS['sparkart']['site']

Weird Network Routing

That time I spent the entire day on the phone troubleshooting why customers couldn’t access the Linkin Park website from a major ISP.

We had a weird issue one time where some users were having problems accessing the Linkin Park website.

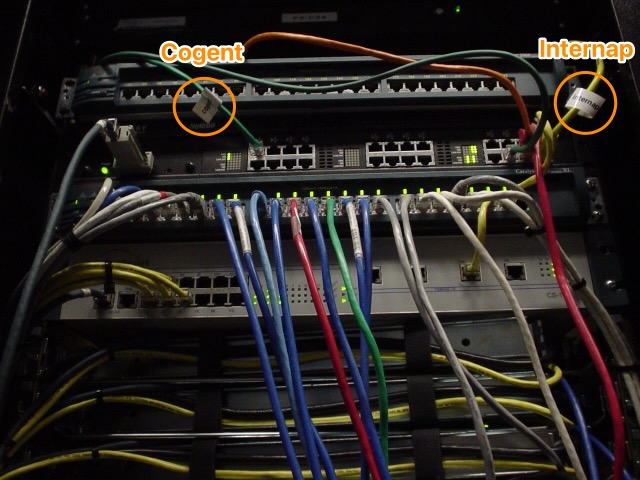

Background

Sparkart had peering agreements with Internap and Cogent providing somewhat redundant network paths. The Sparkart IP blocks were only publicly announced via Internap and not via Cogent. So all inbound traffic arrived via the Internap path. The Sparkart router was configured to send traffic out the Cogent path by default with a fallback to Internap if there was something wrong with Cogent.

This setup never fully made sense to me but I think the idea was that Internap was the more expensive and higher quality bandwidth while Cogent was the cheaper and possibly less reliable provider. Requests (which were smaller than responses) would come in through the higher quality path and responses (larger than the requests) would go out the cheaper lower quality path.

Troubleshooting

At one point we had some users complaining about not being able to access the Linkin Park website. My troubleshooting process went something like:

- Call up Cogent, they point the finger at Internap.

- Call up Internap, they point the finger at the ISP.

- Call up the ISP, they point the finger at Cogent and/or Internap.

- Conference call Cogent, Internap and the ISP to get everybody talking.

- Somebody finally notices the !X in my traceroute indicating “communication administratively prohibited” (i.e. that hop was deliberately dropping the packets).

- We determine the ISP made a recent security change to drop traffic from IPs that were not being announced over a link.

The Cause

There were two problems with this Cogent/Internap setup:

- It wasn’t fully redundant since the IP blocks weren’t announced via the Cogent path. If our Internap link was down then no requests made it into our network. Internap was a single point of failure!

- Since our IPs were not announced via Cogent some ISPs started blocking our outbound traffic as invalid (the problem mentioned above).
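One common way to implement that kind of filter is a strict reverse-path check: a packet is accepted only if the router's best route back to the packet's source address points out the same link the packet arrived on. Whether the ISP used exactly this mechanism is an assumption on my part. The sketch below models it in Python with documentation-only address ranges and hypothetical link names.

```python
import ipaddress


def best_route(routes, address):
    # Longest-prefix match: return the link of the most specific
    # matching route, or None if no route covers the address.
    addr = ipaddress.ip_address(address)
    matches = [(net, link) for net, link in routes if addr in net]
    if not matches:
        return None
    return max(matches, key=lambda m: m[0].prefixlen)[1]


def accept_strict(routes, source_ip, arrival_link):
    # Strict reverse-path check: accept only if the route back to the
    # source uses the link the packet arrived on.
    return best_route(routes, source_ip) == arrival_link


# The ISP's view: "our" block (203.0.113.0/24 here) is only announced
# via the Internap link, never via the Cogent link.
isp_routes = [
    (ipaddress.ip_network("203.0.113.0/24"), "link-internap"),
    (ipaddress.ip_network("198.51.100.0/24"), "link-cogent"),
]

# Our responses left via Cogent, so they arrived on the Cogent link.
via_cogent = accept_strict(isp_routes, "203.0.113.5", "link-cogent")
via_internap = accept_strict(isp_routes, "203.0.113.5", "link-internap")
```

Here `via_cogent` is `False` and `via_internap` is `True`: the same packet is dropped or accepted depending only on which link it arrives on, which matches the asymmetric setup described above.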

Short Term Solution

I forget what the exact short term fix was. It was either:

- Cogent started announcing our IPs in such a way that inbound traffic wouldn’t use the Cogent path.

- The ISP reverted their change.

Long Term Solution

The better longer term solution was for Sparkart to start using BGP on our router to announce our IPs out both Internap and Cogent giving us better control over routing. I didn’t know much about BGP at the time so I was really excited to learn about how it worked and to implement it. Unfortunately, the router that we were using needed a RAM upgrade in order to be able to hold the entire routing table which wasn’t completed before I left Sparkart.

Let’s use Gentoo Linux!

That time I thought we should install Gentoo on all of our new servers!

Near the end of my time at Sparkart I was setting up a stack of ~6 new rackmount servers before moving them to the data center and thought it would be a good idea to use Gentoo Linux on them. I discussed this with the team and convinced them that it was a good idea.

One of the big features of Gentoo (at least at the time) is that its Portage package manager compiles everything from source, specifically targeting your hardware. So if you had a newer processor with additional instruction sets (e.g. MMX, 3DNow!, SSE, SSE2, etc.) the compiler could produce machine code taking advantage of those additional instruction sets without worrying about compatibility. This, theoretically, allowed you to squeeze the maximum amount of performance out of your hardware compared to using pre-compiled generic x86 binaries.

Sorry to whoever had to maintain those servers after me!

All of that was good in theory. But I remember running into problems whenever I had to run upgrades on the servers. You spent a lot of time compiling software and would sometimes run into weird dependency issues. Probably should have gone with Debian!

Moving On

I continued to work for Sparkart for about six months after I graduated from the University of Washington. Sparkart wanted me to move down to the Bay Area to work full-time in their office and I had started looking into apartments in San Rafael, CA.

However, I ended up interviewing with Amazon who made me an attractive offer which I accepted since it meant I could stay in the Seattle area.